There’s a huge difference between the purely academic exercise of training machine learning (ML) models and building end-to-end data-science solutions that help solve real enterprise problems.

By Steven Astorino, Mark Simmonds

Editor's Note: This article is excerpted from chapter 5 of Artificial Intelligence: Evolution and Revolution

This chapter summarizes the lessons learned after two years of our team engaging with dozens of enterprise clients from different industries, including manufacturing, financial services, retail, entertainment, and healthcare, among others.

What are the most common ML problems faced by the enterprise? What is beyond training an ML model? How should data preparation be addressed? How do you scale to large datasets? Why is feature engineering so crucial? How do you go from a model to a fully capable system running in production? Do we need a data science platform if every data science tool is available through open source? These are some of the questions this chapter addresses, exposing challenges, pitfalls, and best practices through specific industry examples.

ML Is Not Only Training Models

We realized this is a pervasive misconception. When we interview aspiring data scientists, we usually ask:

“Say you’re given a dataset with certain characteristics with the goal of predicting a certain variable, what would you do?”

To our dismay, their answer is often something along these lines:

“I’ll split the dataset into training/testing, run logistic regression, random forests, support vector machines (SVMs), deep learning, XGBoost [and a few more unheard-of algorithms], and then compute precision, recall, F1 score [and a few more unheard-of metrics] to finally select the best model.”

But then we ask them:

“Have you even taken a look at the data? What if you have missing values? What if you have wrong values and/or bad data? How do you map your categorical variables? How do you do feature engineering?”

Here are the 10 lessons we see as helpful in creating an end-to-end ML system, including data collection, data curation, data exploration, feature extraction, model training, evaluation, and deployment.

1. You Must Have Data!

As data scientists, data is evidently our main resource. But sometimes, just getting the data can be challenging; it can take weeks or even months for the data science team to obtain the right data assets. Some of the challenges are:

- Access: Most enterprise data are very sensitive, especially when dealing with government, healthcare, and financial industries. Nondisclosure agreements (NDAs) are standard procedure when it comes to sharing data assets.

- Data dispersion: It’s not uncommon to see cases where data are scattered across different units within the organization, requiring approvals from not one but multiple parties.

- Expertise: Having access to the data is often insufficient, as there may be so many sources that only a subject matter expert (SME) would know how to navigate the data lake and provide the data science team with the right data. SMEs may also become a bottleneck for a data science project, as they’re usually swamped with core enterprise operations.

- Privacy: Obfuscation and anonymization have become research areas in their own right and are imperative when dealing with sensitive data.

- Labels: Having the ground truths, or labels, available is usually helpful as it allows the data science team to apply a wide range of supervised-learning algorithms. Yet, in some cases, labeling the data may be too expensive or labels might be unavailable due to legal restrictions. Unsupervised methods such as clustering are useful in these situations.

- Data generators: An alternative when data or labels are not available is to simulate them. When implementing data generators, it is useful to have some information on the data schema, the probability distributions for numeric variables, and the category distributions for nominal ones. If the data is unstructured, Tumblr™ is a great source for labeled images, while Twitter™ may be a great source for free text. Kaggle™ also offers a variety of datasets and solutions on a number of domains and industries.
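A data generator can be as simple as sampling each column from an assumed distribution. The minimal Python sketch below assumes a hypothetical schema with one numeric column (`age`, drawn from a normal distribution) and one nominal column (`state`, drawn with fixed category frequencies), plus a made-up labeling rule for `churned`; none of these names, parameters, or rules come from a real engagement.

```python
import random

# Hypothetical schema. In practice these parameters would be estimated from
# the real data schema and from summary statistics of the real columns.
NUMERIC_COLUMNS = {"age": (45.0, 12.0)}  # column -> (mean, standard deviation)
NOMINAL_COLUMNS = {"state": {"FL": 0.5, "CA": 0.3, "AZ": 0.2}}  # column -> category frequencies


def generate_records(n, seed=0):
    """Sample n synthetic records that follow the hypothetical schema above."""
    rng = random.Random(seed)  # seeded so the synthetic dataset is reproducible
    records = []
    for _ in range(n):
        record = {}
        for name, (mean, std) in NUMERIC_COLUMNS.items():
            # Clip at zero so a normal draw can never produce a negative age.
            record[name] = max(0.0, rng.gauss(mean, std))
        for name, freqs in NOMINAL_COLUMNS.items():
            categories = list(freqs)
            weights = [freqs[c] for c in categories]
            record[name] = rng.choices(categories, weights=weights, k=1)[0]
        # Hypothetical labeling rule, standing in for labels that are unavailable.
        record["churned"] = int(record["age"] > 60 and record["state"] == "FL")
        records.append(record)
    return records


if __name__ == "__main__":
    for row in generate_records(3):
        print(row)
```

Seeding the generator makes the synthetic dataset reproducible, which helps when results on it have to be compared with results on real data later. A realistic generator would also need to reproduce correlations between columns, which this sketch ignores.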

2. Big Data Is Often Not So Big

This is a controversial one, especially after all the hype generated by big data vendors over the past decade, who have emphasized the need for scalability and performance. Nonetheless, we need to make a distinction between raw data (i.e., all the pieces that may or may not be relevant for the problem at hand) and a feature set (i.e., the input matrix to the ML algorithms used). The process of going from raw data to a feature set is called data preparation, and it usually involves these tasks:

- Discarding invalid/incomplete/dirty data, which, in our experience, could be up to half of the records available

- Aggregating one or more datasets, including performing operations such as joins and group-by aggregations

- Selecting/extracting features desired and removing features that may be irrelevant (such as unique IDs) and applying other dimensionality-reduction techniques such as principal component analysis (PCA)

- Using sparse data representation or feature hashers to reduce the memory footprint of datasets with many zero values
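To make the raw-data versus feature-set distinction concrete, the following Python sketch applies the first three steps to two tiny, hypothetical tables: it discards dirty transaction rows, aggregates the remaining transactions per customer, joins in the customer’s income, and leaves the unique IDs out of the output. The table layouts and the validity rule are assumptions for illustration.

```python
from collections import defaultdict

# Hypothetical raw inputs: a transactions table and a customers table.
transactions = [
    {"txn_id": 1, "customer_id": "c1", "amount": 120.0},
    {"txn_id": 2, "customer_id": "c1", "amount": None},   # incomplete record
    {"txn_id": 3, "customer_id": "c2", "amount": 35.5},
    {"txn_id": 4, "customer_id": "c2", "amount": -10.0},  # invalid record
    {"txn_id": 5, "customer_id": "c2", "amount": 64.5},
]
customers = {"c1": {"income": 52000.0}, "c2": {"income": 38000.0}}


def is_valid(txn):
    """Hypothetical rule for dirty rows: a missing or negative amount."""
    return txn["amount"] is not None and txn["amount"] >= 0


def build_feature_set(transactions, customers):
    """Turn raw transactions into one feature row per customer."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for txn in filter(is_valid, transactions):  # discard dirty data
        totals[txn["customer_id"]] += txn["amount"]  # group-by aggregation
        counts[txn["customer_id"]] += 1

    feature_set = []
    for cid in sorted(counts):
        feature_set.append({
            "txn_count": counts[cid],
            "txn_total": totals[cid],
            "income": customers[cid]["income"],  # join on customer_id
            # txn_id and customer_id are not copied: unique IDs carry no
            # signal that generalizes to new customers.
        })
    return feature_set


if __name__ == "__main__":
    for row in build_feature_set(transactions, customers):
        print(row)
```

Five raw transaction rows shrink to two feature rows, one per customer. At enterprise scale, the same kind of reduction is what often makes the final feature set small enough for in-memory tools.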

After all the data preparation steps have been completed, it’s not hard to realize that the final feature set, which will be the input of the ML model, will be much smaller; and it is not uncommon to see cases where in-memory frameworks such as R (a programming language for statisticians and data miners) or scikit-learn (a machine-learning library for Python™) are sufficient to train models. In cases where even the feature set is huge, big data tools such as Apache™ Spark™ may come in handy; however, they may have a limited set of algorithms available.

3. Beware of Dirty Data

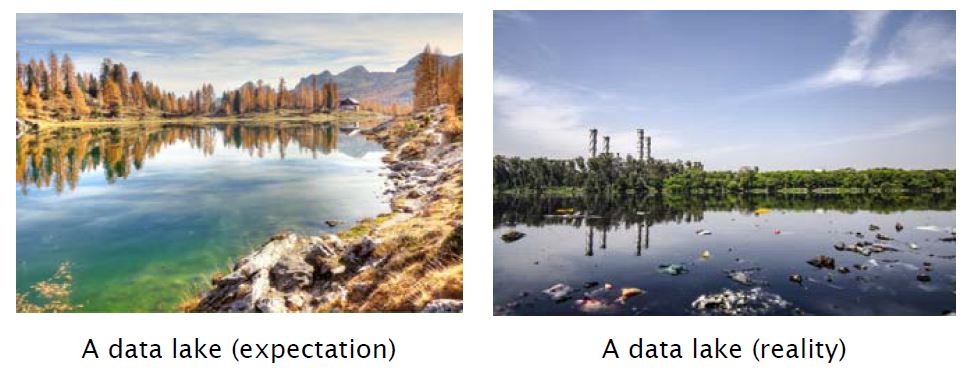

We can’t emphasize this enough. Data is dirty. In most engagements, clients are proud and excited to talk about their data lakes: how beautiful their data lakes are, and how many insights they can’t wait to get out of them. However, the vision of a data lake often does not reflect the reality, as depicted in Figure 5.1.

Figure 5.1: Data lake—expectation versus reality

(Images by kordula vahle, left, and Yogendra Singh, right, from Pixabay)

This is where scalable frameworks such as Apache Spark become important, because data curation transformations will need to be performed on the raw data. A few typical curation tasks are:

- Outlier detection: A negative age, a floating-point zip code, or a credit score of zero are just a few examples of invalid values. Not correcting such values may introduce high bias when training the model.

- Missing/incorrect value imputation: The obvious way to address missing/incorrect values is simply to discard them. An alternative is imputation, that is, replacing missing/incorrect values with the mean, median, or mode of the corresponding attribute. Another option is interpolation, which refers to building a model to predict the attribute with missing values. Finally, domain knowledge may also be used for imputation. Say we’re dealing with patient data and there’s an attribute indicating whether a patient has had cancer. If such information is missing, one could look into the appointments dataset and find out whether the patient has had any appointments with an oncologist.

- Dummy coding and feature hashing: These are useful for turning categorical data into numerical data, especially for coefficient-based algorithms. Say there’s an attribute, state, which indicates states of the USA (e.g., FL, CA, AZ). Mapping FL to 1, CA to 2, AZ to 3, and so forth introduces order and magnitude: AZ would be greater than FL, and CA would be twice as big as FL. One-hot encoding (also called dummy coding) addresses this issue by mapping a categorical column into multiple binary columns, one for each category value. Feature hashing instead maps each category to one of a fixed number of columns through a hash function, which keeps the column count bounded when there are many categories.

- Scaling: Coefficient-based algorithms experience bias when features are in different scales. Say age is given in years between 0 and 100, whereas salary is given in dollars between 0 and 100,000.00. The optimization algorithm may assign more weight to salary, just because it has a higher absolute magnitude. Consequently, normalization is usually advisable, and common methods include z-scoring or standardization (when the data is considered normal) and min-max feature scaling.

- Binning: Mapping a real-valued column into different categories can be useful, for example, to turn a regression problem into a classification one. Say you’re interested in predicting arrival delay of flights in minutes. An option would be to predict whether the flight is going to be early, on time, or late, defining ranges for each category.
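The first two curation tasks can be sketched in a few lines of Python. The validity rules below (an age between 0 and 120, a five-digit string zip code, a credit score between 300 and 850) are assumptions chosen to match the examples above; real rules should come from domain experts. Invalid values are first replaced with `None` and then imputed with the median (or mean, or mode) of the observed values.

```python
import statistics

# Hypothetical validity rules; real ones should come from domain experts.
RULES = {
    "age": lambda v: isinstance(v, (int, float)) and 0 <= v <= 120,
    "zip_code": lambda v: isinstance(v, str) and len(v) == 5 and v.isdigit(),
    "credit_score": lambda v: isinstance(v, (int, float)) and 300 <= v <= 850,
}

# Hypothetical raw records containing the kinds of errors described above.
raw_rows = [
    {"age": 34, "zip_code": "33101", "credit_score": 710},
    {"age": -3, "zip_code": "94105", "credit_score": 680},   # negative age
    {"age": 51, "zip_code": 33101.5, "credit_score": 0},     # float zip, zero score
    {"age": 29, "zip_code": "85001", "credit_score": None},  # missing score
]


def mark_invalid(rows):
    """Return copies of the rows with rule-breaking values replaced by None."""
    cleaned = []
    for row in rows:
        new_row = dict(row)
        for col, rule in RULES.items():
            if new_row.get(col) is not None and not rule(new_row[col]):
                new_row[col] = None
        cleaned.append(new_row)
    return cleaned


def impute(rows, col, strategy="median"):
    """Fill None values in `col` with the mean, median, or mode of observed values."""
    stat = {"mean": statistics.mean, "median": statistics.median, "mode": statistics.mode}[strategy]
    fill = stat([r[col] for r in rows if r[col] is not None])
    return [{**r, col: fill if r[col] is None else r[col]} for r in rows]


if __name__ == "__main__":
    cleaned = mark_invalid(raw_rows)
    filled = impute(impute(cleaned, "age"), "credit_score")
    for row in filled:
        print(row)
```

Marking bad values as missing, rather than deleting whole rows, keeps the rest of each record usable; whether that beats discarding depends on how much of the data is affected.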
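The remaining tasks (dummy coding, feature hashing, scaling, and binning) are also short enough to sketch directly. This Python version is illustrative only: the 8-bucket hash size and the early/late delay thresholds are hypothetical, and in practice libraries such as scikit-learn provide tested equivalents (for example `OneHotEncoder`, `FeatureHasher`, `MinMaxScaler`, and `StandardScaler`).

```python
import hashlib
import statistics


def one_hot(values):
    """Dummy-code a categorical column: one binary column per category value."""
    categories = sorted(set(values))
    return categories, [[int(v == c) for c in categories] for v in values]


def hash_vector(value, n_buckets=8):
    """Feature hashing: map a category into a fixed number of columns.

    Different categories may collide in the same bucket; that is the price
    paid for a bounded column count.
    """
    index = int(hashlib.sha256(value.encode("utf-8")).hexdigest(), 16) % n_buckets
    vector = [0] * n_buckets
    vector[index] = 1
    return vector


def min_max(values):
    """Rescale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def z_score(values):
    """Standardize values to mean 0 and (population) standard deviation 1."""
    mean, std = statistics.mean(values), statistics.pstdev(values)
    if std == 0:
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]


def bin_delay(minutes, early=-5, late=15):
    """Turn an arrival delay in minutes into a class label (thresholds are hypothetical)."""
    if minutes < early:
        return "early"
    if minutes <= late:
        return "on time"
    return "late"


if __name__ == "__main__":
    print(one_hot(["FL", "CA", "AZ", "FL"]))
    print(hash_vector("FL"))
    print(min_max([0.0, 50_000.0, 100_000.0]))  # salaries in dollars
    print(z_score([20, 40, 60]))                 # ages in years
    print([bin_delay(m) for m in (-12, 3, 48)])
```

The hash uses `hashlib` rather than Python’s built-in `hash()`, because the built-in hash of a string is randomized per process and would map the same category to different columns on different runs. Scaling parameters (minimum, maximum, mean, standard deviation) should be computed on the training data only and then reused on new data.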

4. It’s All About Feature Engineering

In a nutshell, features are the characteristics from which the ML algorithm will learn. As expected, “noisy” or irrelevant features can affect the quality of the model, so it is critical to have good features. These are a few strategies for feature engineering:

- Define what you want to predict. What would each instance represent? A customer? A transaction? A patient? A ticket? Make sure each row of the feature set corresponds to one instance.

- Avoid unique IDs. Not only are they irrelevant in most cases, but they can also lead to serious “overfitting,” especially with algorithms such as XGBoost. Overfitting is described later, but in short: a model is usually selected by maximizing its performance on a set of training data (https://en.wikipedia.org/wiki/Training_validation,_and_test_sets#training_set), yet its suitability is determined by how well it performs on unseen data. Overfitting occurs when a model begins to “memorize” the training data rather than “learning” to generalize from a trend. A unique ID makes such memorization easy, because each value points to exactly one training record.

- Use domain knowledge to derive new features that help measure success/failure. The number of hospital visits may be an indicator of patient risk; the total amount of foreign transactions in the past month may be an indicator of fraud; the ratio of the requested loan amount to the annual income may be an indicator of credit risk.

- Use natural language processing (NLP) techniques to derive features from unstructured free text. Some examples are Latent Dirichlet Allocation, or LDA (a generative statistical model that explains a collection of documents as mixtures of latent topics), Term Frequency-Inverse Document Frequency, or TF-IDF (a numerical statistic that is intended to reflect how important a word is to a document in a collection), word2vec (models that are used to produce word embeddings), and doc2vec (an extension of word2vec that produces embeddings for whole documents).

- Use dimensionality reduction if the number of features is very large; examples include principal component analysis, or PCA (an orthogonal linear transformation), and t-Distributed Stochastic Neighbor Embedding, or t-SNE (a nonlinear technique used mainly to visualize high-dimensional data in two or three dimensions).
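As an illustration of domain-derived features, the sketch below builds one feature row per loan applicant, the prediction instance in this hypothetical case. It computes two of the indicators mentioned above, the loan-to-income ratio and the total of foreign transactions over the past 30 days, and deliberately leaves out the applicant’s unique ID. Field names, the 30-day window, and the sample data are assumptions.

```python
from datetime import date, timedelta


def derive_features(applicant, transactions, as_of):
    """Build one feature row for a loan applicant (the prediction instance)."""
    window_start = as_of - timedelta(days=30)
    foreign_total = sum(
        t["amount"]
        for t in transactions
        if t["foreign"] and window_start <= t["date"] <= as_of
    )
    return {
        # Requested loan relative to annual income: a possible credit-risk indicator.
        "loan_to_income": applicant["loan_amount"] / applicant["annual_income"],
        # Foreign spending over the last 30 days: a possible fraud indicator.
        "foreign_total_30d": foreign_total,
        # applicant["applicant_id"] is deliberately not copied (unique ID).
    }


# Hypothetical sample data.
applicant = {"applicant_id": "A-17", "loan_amount": 30_000.0, "annual_income": 60_000.0}
transactions = [
    {"date": date(2024, 3, 1), "amount": 200.0, "foreign": True},
    {"date": date(2024, 3, 20), "amount": 150.0, "foreign": True},
    {"date": date(2024, 3, 25), "amount": 80.0, "foreign": False},
    {"date": date(2024, 1, 10), "amount": 500.0, "foreign": True},  # outside the window
]

if __name__ == "__main__":
    print(derive_features(applicant, transactions, as_of=date(2024, 3, 31)))
```

Each call returns exactly one row per applicant, which keeps the feature set aligned with the question of what each instance represents.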
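TF-IDF in particular is simple enough to compute by hand. The following Python sketch uses the textbook definition: term frequency (count divided by document length) times the logarithm of the number of documents divided by the number of documents containing the term. Libraries such as scikit-learn’s `TfidfVectorizer` use smoothed and normalized variants by default, so their numbers will differ. The tokenized ticket texts are hypothetical.

```python
import math
from collections import Counter


def tf_idf(documents):
    """Compute TF-IDF weights for a list of tokenized documents.

    tf(t, d) = count of t in d / number of tokens in d
    idf(t)   = log(N / number of documents containing t)
    """
    n_docs = len(documents)
    doc_freq = Counter()
    for doc in documents:
        doc_freq.update(set(doc))  # count each term at most once per document
    weights = []
    for doc in documents:
        counts = Counter(doc)
        weights.append({
            term: (count / len(doc)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights


if __name__ == "__main__":
    # Hypothetical, already-tokenized support tickets.
    docs = [["engine", "failure", "reported"], ["engine", "noise"], ["billing", "error"]]
    for weights in tf_idf(docs):
        print(weights)
```

A term that appears in every document gets a weight of zero, which is the intended behavior: it does not help distinguish one document from another.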

5. Anomaly Detection Is Everywhere

If we had to pick the single most common ML use case in the enterprise, it would be anomaly detection. Whether we’re referring to fraud detection, manufacturing testing, customer churn, patient risk, customer delinquency, system crash prediction, and so forth, the question is almost always: “Can we find the needle in the haystack?” This leads to our next topic, which relates to unbalanced datasets.

A few common algorithms for anomaly detection include:

- Auto-encoders

- One-class classification algorithms, such as a one-class support vector machine (SVM)

- Confidence intervals

- Clustering

- Classification using over-sampling and under-sampling
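Of the approaches listed above, the confidence-interval one is the easiest to sketch. The Python version below is, strictly speaking, a mean ± z standard deviations band estimated from historical data that is assumed to be roughly normal and mostly free of anomalies; the choice of z = 3 and the sensor readings are assumptions for illustration.

```python
import statistics


def fit_interval(history, z=3.0):
    """Estimate a mean +/- z * standard-deviation band from historical values."""
    mean = statistics.mean(history)
    std = statistics.stdev(history)
    return mean - z * std, mean + z * std


def flag_anomalies(values, interval):
    """Flag each value that falls outside the band as an anomaly."""
    lo, hi = interval
    return [v < lo or v > hi for v in values]


# Hypothetical sensor readings from normal operation.
history = [10.1, 9.8, 10.0, 10.2, 9.9, 10.0, 10.1, 9.9]

if __name__ == "__main__":
    band = fit_interval(history)
    print(band)
    print(flag_anomalies([10.05, 12.7, 9.95, 7.4], band))
```

Fitting the band on data that already contains many anomalies inflates the standard deviation and widens the band, so the history used for fitting should be as clean as possible.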
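For the last approach, the classifier itself is standard; the extra step is rebalancing the training data. This minimal sketch assumes binary labels stored as 0/1 under a `label` key and implements plain random over-sampling (duplicating minority rows) and random under-sampling (dropping majority rows); more elaborate schemes such as SMOTE are not shown.

```python
import random


def rebalance(rows, label_key="label", strategy="over", seed=0):
    """Balance a binary (0/1) dataset by random over- or under-sampling."""
    rng = random.Random(seed)
    positives = [r for r in rows if r[label_key] == 1]
    negatives = [r for r in rows if r[label_key] == 0]
    minority, majority = sorted([positives, negatives], key=len)
    if not minority:
        raise ValueError("both classes must be present")
    if strategy == "over":
        # Duplicate randomly chosen minority rows until both classes are the same size.
        extra = [rng.choice(minority) for _ in range(len(majority) - len(minority))]
        balanced = majority + minority + extra
    elif strategy == "under":
        # Keep a random subset of the majority class as large as the minority class.
        balanced = rng.sample(majority, len(minority)) + minority
    else:
        raise ValueError(f"unknown strategy: {strategy!r}")
    rng.shuffle(balanced)
    return balanced


if __name__ == "__main__":
    # Hypothetical imbalanced data: 95 normal records, 5 anomalies.
    data = [{"label": 0} for _ in range(95)] + [{"label": 1} for _ in range(5)]
    for strategy in ("over", "under"):
        balanced = rebalance(data, strategy=strategy)
        print(strategy, len(balanced), sum(r["label"] for r in balanced))
```

Resampling should be applied to the training split only; rebalancing the test set would give a misleading picture of how the model performs on the naturally imbalanced data it will see in production.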

Look for the next five lessons in an upcoming issue of MC Systems Insight. Can't wait? Pick up your copy of Artificial Intelligence: Evolution and Revolution at the MC Press Bookstore today!

MC Press Online