Considering that the IT industry has become hooked on the idea of straight-through processing and real-time information, it's surprising to discover that few people are aware of how job scheduling can help achieve this goal. The majority of enterprise computing still consists of batch job processing, yet its potential to impact overall business objectives is being overlooked. In most data centers, little or no effort has been put into improving this vital component of IT operations.

In recent years, critical innovations in job scheduling have resulted in the ability to shrink the batch window. This leads to significant cost-savings and can help facilitate the growth of the real-time enterprise. Without the need for additional hardware, more jobs can be added to the enterprise workload, and measurable efficiency can be achieved. At the same time, enterprises gain more breathing room to recover from errors and ensure that Service Level Agreements (SLAs) are met. ROI-hungry data center managers should consider the following relatively simple concepts that are embodied in today's innovative job schedulers.

1. Event-Driven Job Scheduling

The majority of job schedulers were originally designed to automate the human processes that were required to schedule the data center's workload. These systems basically automated a daily run-sheet. In the early days, when workloads were smaller and more predictable, this process was sufficient. However, many of these systems are still in use today, and their functionality falls far short of what is required in the real-time enterprise (RTE). A more advanced, event-driven form of job scheduling is essential: the RTE requires a scheduler that responds to events as they occur, as well as to calendar requirements such as date and time. Consider the following event sources:

- Web Services

- File or dataset activity

- Built-in file transfers between agents or FTP servers

- Third-party applications

- Spool file contents

- Text file contents

- Database activity (issuance of SQL commands)

- IP activity

- CPU resources

- Disk resources

- Memory resources

Event-driven also refers to the internal architecture of the scheduling solution and the way it manages workload. Traditional (older) job schedulers poll a database or other data source at fixed time intervals to determine if and when a job is complete before releasing a subsequent job. The result is slack time, or latency, which creates longer batch processing time and can run the risk of violating SLAs.
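To put a rough number on that slack, here is a back-of-the-envelope sketch in Python. It assumes (this is an illustration, not a measurement from any product) that a job is equally likely to finish at any point within a polling interval, so each hand-off between jobs waits half an interval on average. The 60-second interval and the 200 hand-offs are hypothetical figures.

```python
def expected_polling_slack(poll_interval_s: float, handoffs: int) -> float:
    """Average total slack in seconds, assuming job completions are
    uniformly distributed within the polling interval (an assumption)."""
    return handoffs * poll_interval_s / 2


def worst_case_polling_slack(poll_interval_s: float, handoffs: int) -> float:
    """Upper bound: every job finishes just after a poll has run."""
    return handoffs * poll_interval_s


# Hypothetical workload: 200 job-to-job hand-offs, polled every 60 seconds.
average_min = expected_polling_slack(60, 200) / 60
worst_min = worst_case_polling_slack(60, 200) / 60
print(f"average slack: {average_min:.0f} min, worst case: {worst_min:.0f} min")
# prints "average slack: 100 min, worst case: 200 min"
```

Under these assumptions, polling alone would add well over an hour to the batch window without any job doing useful work.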

Job schedulers using an event-driven architecture save valuable time. Whenever a monitored event occurs, messages are automatically sent between components. There is no idle time while a database is being polled, and the latency between a job ending and its successor starting shrinks from a polling interval to the time it takes to deliver a message. This type of "push" process reduces the batch window significantly.
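The push model can be sketched in a few lines of Python. This is a minimal, single-process illustration rather than how any particular scheduler is built: the class name, method names, and the example jobs are all hypothetical, and a real product would exchange messages between agents instead of calling functions in a loop. The point is only that a job's completion directly releases its successors, with no timer in between.

```python
from collections import defaultdict, deque


class EventDrivenScheduler:
    """Toy scheduler: a completion event releases successors immediately."""

    def __init__(self):
        self.actions = {}                   # job name -> callable to run
        self.waiting_on = {}                # job name -> unfinished predecessors
        self.successors = defaultdict(set)  # job name -> jobs that depend on it
        self.run_order = []                 # jobs in the order they ran

    def add_job(self, name, action, after=()):
        self.actions[name] = action
        self.waiting_on[name] = set(after)
        for predecessor in after:
            self.successors[predecessor].add(name)

    def run(self):
        # Jobs with no predecessors are ready at once.
        ready = deque(sorted(j for j, deps in self.waiting_on.items() if not deps))
        while ready:
            job = ready.popleft()
            self.actions[job]()
            self.run_order.append(job)
            # The completion "event" is pushed to each successor right away.
            for successor in sorted(self.successors[job]):
                self.waiting_on[successor].discard(job)
                if not self.waiting_on[successor]:
                    ready.append(successor)


scheduler = EventDrivenScheduler()
scheduler.add_job("extract", lambda: None)
scheduler.add_job("transform", lambda: None, after=["extract"])
scheduler.add_job("load", lambda: None, after=["transform"])
scheduler.add_job("report", lambda: None, after=["transform", "load"])
scheduler.run()
print(scheduler.run_order)  # ['extract', 'transform', 'load', 'report']
```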

2. Design Business Processes, Not Just Schedules

Business applications usually require that suites of inter-related jobs be executed in a particular sequence, yet individual jobs within these suites may have different run frequencies. A job suite running every 15 minutes, for example, may contain a single job that needs to run only every three hours. Or a daily job stream may contain jobs that need to run only at a specified time each month or at the end of a financial quarter.

In traditional job scheduling, dependencies between jobs have to be hard-coded, and all the jobs in a suite must have the same run frequency. This means that unique job streams must be created for jobs and dependencies running at different times or frequencies. More manual effort is required, which can result in an increase in errors. Instead of defining a business process, administrators are writing daily run-sheets for each business application.

Advanced job scheduling simplifies this process with a single definition for each business application. Different run frequencies can be specified for each job, and jobs can span any supported execution platform. Maintaining multiple schedules is unnecessary, and costly integration is eliminated. Since all scheduling operations can be performed across platforms from a single point of control, there are fewer errors and jobs can be viewed as business applications.

As the constraints of scheduling by calendar and platform are eliminated, jobs can be grouped together and defined explicitly to represent business applications. In this way, they become much more meaningful to the business. Administrators can set application-specific SLAs and perform impact analysis at the business application level. More importantly, IT departments become more responsive and accountable to the needs of business users.

Manually hard-coding schedules for every permutation of time and date is a time-consuming and error-prone process. With advanced job scheduling, each job is defined once, and the scheduler automatically determines which jobs to run on a particular date. This reduces the number of jobs to be managed, and changes can be implemented more quickly. As a result, the time it takes to execute batch processing is greatly reduced and large, complex IT environments become easier to manage.
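As a rough illustration of "define once, let the scheduler decide," the Python sketch below attaches a run rule to each job in a single application definition and resolves the job list for any given date. The application name, job names, and rule functions are hypothetical, and the financial quarter is assumed to match the calendar quarter.

```python
import calendar
from datetime import date


def daily(d: date) -> bool:
    return True


def month_end(d: date) -> bool:
    # monthrange returns (weekday of first day, number of days in month)
    return d.day == calendar.monthrange(d.year, d.month)[1]


def quarter_end(d: date) -> bool:
    # Assumption: financial quarters coincide with calendar quarters.
    return d.month in (3, 6, 9, 12) and month_end(d)


# One definition per business application; each job carries its own rule.
BILLING_APP = {
    "load_transactions": daily,
    "post_ledger": daily,
    "monthly_statements": month_end,
    "quarterly_close": quarter_end,
}


def jobs_for(app: dict, run_date: date) -> list:
    """Return the jobs in this application that should run on run_date."""
    return [name for name, rule in app.items() if rule(run_date)]


print(jobs_for(BILLING_APP, date(2024, 3, 15)))
# ['load_transactions', 'post_ledger']
print(jobs_for(BILLING_APP, date(2024, 3, 31)))
# ['load_transactions', 'post_ledger', 'monthly_statements', 'quarterly_close']
```

Four job definitions cover every date in the year; a hand-built run-sheet would need a separate variant for ordinary days, month-ends, and quarter-ends.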

Traditional Time-Based Job Scheduling vs. Business Process-Based Job Scheduling

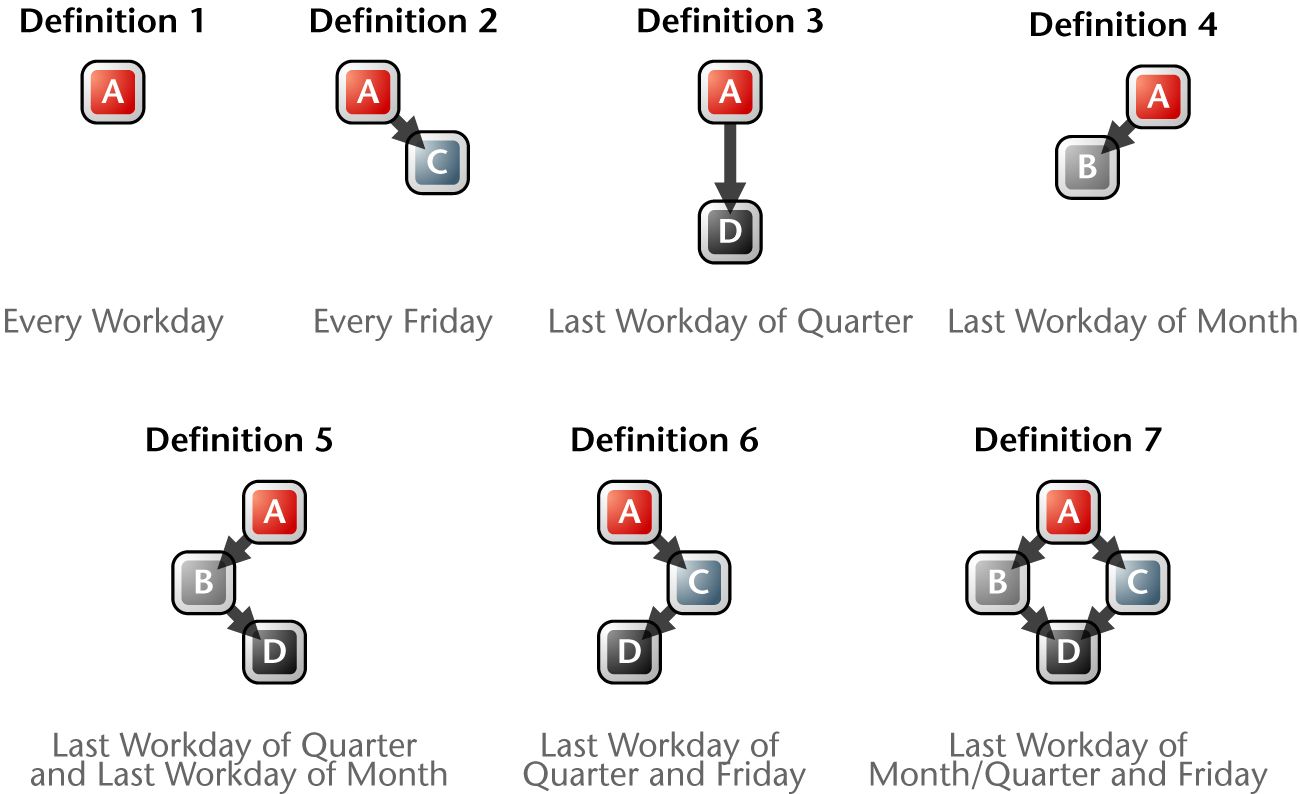

The diagram above shows how traditional job scheduling becomes increasingly complex as administrators are forced to hard-code job definitions based on various combinations of time and date.

By intelligently determining which process should run based on specified conditions, automated event-driven job scheduling reduces the total number of definitions required. There is less duplication, fewer errors, and less risk of SLA failures. And there is a clearer view of the business process being supported.

3. Managing and Expediting the Critical Path

The critical path is just that: critical. In a schedule, the string of dependent jobs that takes the longest time to complete makes up the critical path, which determines how long a business process takes to run. Your job scheduler should be able to automatically analyze the critical path and alert you to any possible breaches that can result in SLA violations. To ensure that the critical path is met, it should also be able to automatically lower the priority of jobs not on the critical path in favor of those that are.
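Finding the critical path amounts to finding the longest chain through the job dependency graph. The Python sketch below shows one way to do that, assuming the dependencies contain no cycles and that estimated durations are known in advance. The job names, durations, and the 90-minute SLA window are hypothetical.

```python
from functools import lru_cache


def critical_path(durations: dict, depends_on: dict):
    """Return (total_minutes, [jobs]) for the longest dependency chain.

    Assumes the dependency graph is acyclic.
    """

    @lru_cache(maxsize=None)
    def longest_to(job):
        # Longest chain that ends at one of this job's predecessors.
        best_len, best_path = max(
            (longest_to(dep) for dep in depends_on.get(job, ())),
            default=(0, ()),
        )
        return best_len + durations[job], best_path + (job,)

    total, path = max(longest_to(job) for job in durations)
    return total, list(path)


durations = {"extract": 30, "validate": 10, "transform": 45, "archive": 5, "load": 20}
depends_on = {
    "validate": ["extract"],
    "transform": ["extract"],
    "archive": ["extract"],
    "load": ["validate", "transform"],
}

total, path = critical_path(durations, depends_on)
print(total, path)  # 95 ['extract', 'transform', 'load']

SLA_WINDOW_MINUTES = 90  # hypothetical SLA
if total > SLA_WINDOW_MINUTES:
    print("alert: critical path exceeds the SLA window")
```

Here the chain through "transform" takes 95 minutes, so a 90-minute SLA would be flagged before the batch even starts.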

Advanced job schedulers give administrators the ability to manage the critical path and define criteria (for example, whether a job is overdue) that give the most important jobs the highest priority. These jobs will execute sooner and run faster. They automatically borrow resources from less important jobs to ensure that they take the fastest possible route through schedules and processes. The result is an increase in SLA integrity and a shortened batch window.
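One simple way to express such criteria is a priority queue in which jobs on the critical path are dispatched first and overdue jobs come next. The Python sketch below is only an illustration of that ordering: the function name, the two criteria chosen, and the sample jobs are assumptions, and it does not model how a scheduler reallocates CPU or other resources.

```python
import heapq


def dispatch_order(ready_jobs, critical_jobs, minutes_overdue):
    """Order ready jobs: critical-path jobs first, then the most overdue."""
    heap = [
        # False sorts before True, so critical-path jobs come out first;
        # negating the overdue minutes puts the most overdue job next.
        (job not in critical_jobs, -minutes_overdue.get(job, 0), job)
        for job in ready_jobs
    ]
    heapq.heapify(heap)
    return [heapq.heappop(heap)[2] for _ in range(len(heap))]


order = dispatch_order(
    ready_jobs=["archive", "transform", "validate", "report"],
    critical_jobs={"transform"},
    minutes_overdue={"report": 15},
)
print(order)  # ['transform', 'report', 'archive', 'validate']
```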

A single advanced job scheduling solution offers an alternative to the inefficient multiple-product environment that still prevails in most data centers. Some forward-thinking companies have adopted innovative schedulers, but far too many have spent little time or effort in upgrading this vital IT component.

Optimize Efficiency and Decrease Costs

Data center managers have a fantastic opportunity to optimize efficiency and decrease costs by making a relatively minor investment in the emerging technology of event-based job scheduling. They can shrink the batch window for their mission-critical processes and integrate those processes with such real-time technologies as Web Services. More importantly, they will be implementing a 21st-century job scheduling solution that has the flexibility to meet future business objectives in an increasingly fast-paced, global economy.

Ray Nissan founded Cybermation in 1982. He has more than 25 years of information technology experience. He holds an honors bachelor's degree in physics from the University of London, England, and continues to be an Associate of the Royal College of Science (ARCS). Nissan’s vision and forward-thinking approach have provided the leading-edge technology base for Cybermation.

MC Press Online